| author | orivej <orivej@yandex-team.ru> | 2022-02-10 16:44:49 +0300 |

|---|---|---|

| committer | Daniil Cherednik <dcherednik@yandex-team.ru> | 2022-02-10 16:44:49 +0300 |

| commit | 718c552901d703c502ccbefdfc3c9028d608b947 (patch) | |

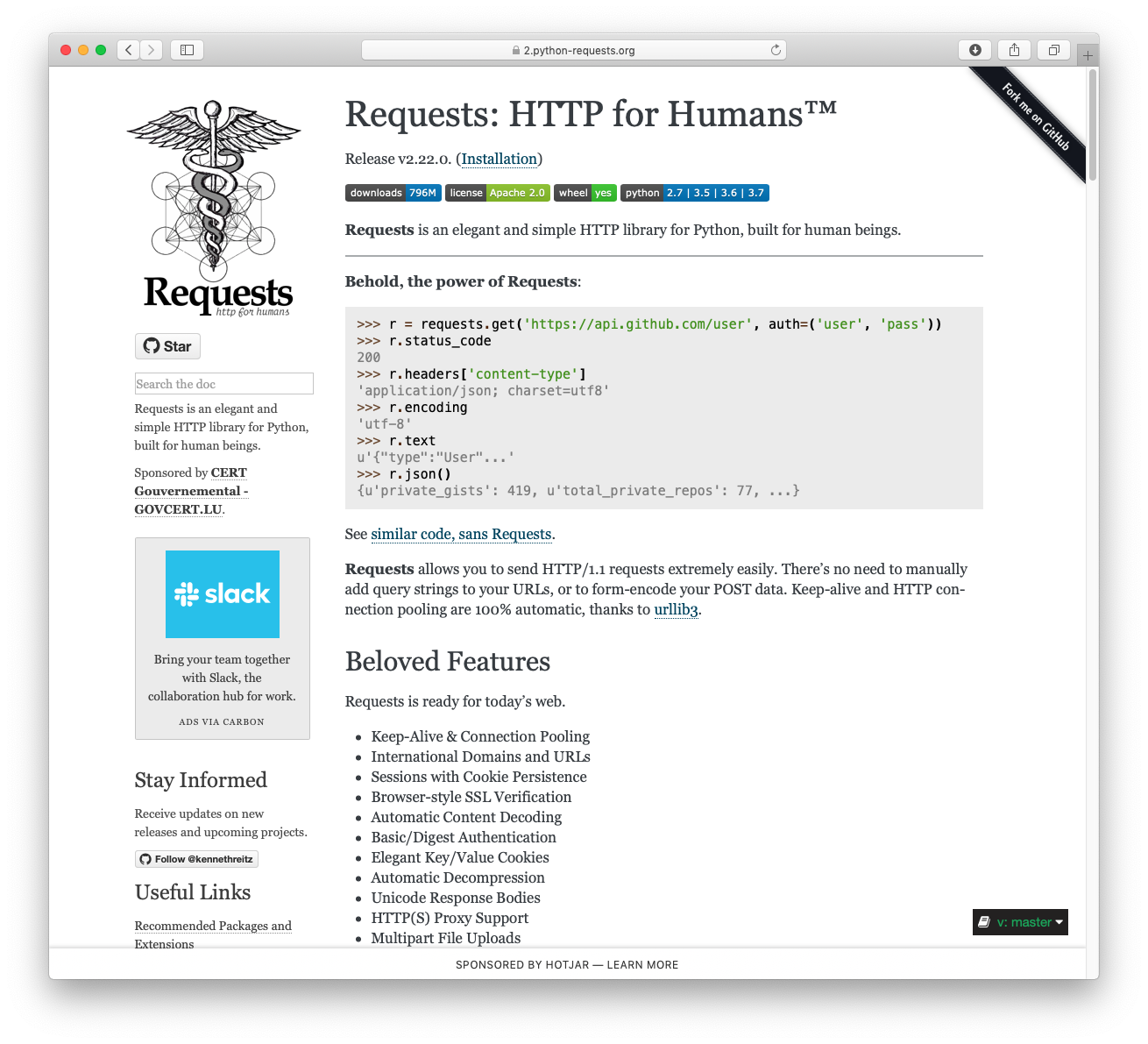

| tree | 46534a98bbefcd7b1f3faa5b52c138ab27db75b7 /contrib/python/requests | |

| parent | e9656aae26e0358d5378e5b63dcac5c8dbe0e4d0 (diff) | |

| download | ydb-718c552901d703c502ccbefdfc3c9028d608b947.tar.gz | |

Restoring authorship annotation for <orivej@yandex-team.ru>. Commit 1 of 2.

Diffstat (limited to 'contrib/python/requests')

21 files changed, 1462 insertions, 1462 deletions